Motivation

Be forewarned: this article opens with a long diatribe that may bore everybody reading it. It was certainly boring to write.

Computers have always been magic to me. I’ve always enjoyed using and programming them, but often had a very vague idea of how they actually worked. This is one reason that I’ve been driven to learn more about hardware. I wanted to go from magic to knowledge. The last step in that process is making my own computer. I’d like to say I’ve always wanted to make my own computer, but that would be a lie (like the cake, but I digress).

I will say that I’ve always been fascinated with the story of Woz and the Apple II. In fact, I wrote a book report on that development back in 4th grade, but I’ll not embarrass myself by recounting that any further. What was surprising is that building your own machine was not an uncommon thing to do back in the day. If you wanted a computer, you would get some memory chips and a CPU and hack one together, which is how the Homebrew Computer Club in Silicon Valley came about.

With cheap hardware and hobbyist electronics becoming more popular, homebrew computers are once again becoming commonplace. Some of my favorites are the Kiwi Computer, the BMOW 1, and Veronica. In fact, attending the recent Hackaday anniversary in Pasadena, where Quinn Dunki spoke, was rather inspiring, because I thought, “I’m a software person like her, I can do it too.”

But by far my favorite project is the Magic-1 by Bill Buzbee. This project is great on many levels. It’s a homebrew CPU made out of discrete logic chips. When I saw this years ago, I thought, “that dude is nuts.” Now that I’ve done more hardware I’m like, “that dude is cool.” However, what is more impressive about his project is that he did the whole toolchain: assembler, C compiler, linker, loader, VM, and a Minix port. He really sought to understand the whole hardware/software stack, and that, I think, rounds out the educational value of such an endeavor.

At the end of the day, the reason everybody is doing these projects is to learn more. Knuth, in his book “Things a Computer Scientist Rarely Talks About,” makes the case that what typifies a computer scientist is the ability to move between levels of abstraction rapidly and fluidly while keeping the other levels in mind at all times. I would agree this is an essential trait of any great computer scientist and probably any engineer. One could also argue it is an important trait of any successful CEO or national leader. The ability to balance and understand minutiae while tracking the big and mid-size pictures is essential and difficult. For that, deep knowledge of as many levels of the computation hierarchy as possible is essential.

Goals

Thus, my goal is to make a homebrew computer and its software. The main design goals are:

- FPGA based – I want to use an FPGA, because I don’t really desire to use discrete 74-series logic. I also don’t want to be limited to existing processors.

- A custom instruction set – Designing an ISA is tricky because it involves balancing resources against computational needs. Furthermore, it will necessitate a custom toolchain with at least a custom assembler. I’d like to use fixed-size instructions and be vaguely RISC-like for simplicity.

- 16 bit addresses, 16 bit datapath – I’d really like to avoid a lot of limitations, so the temptation to go 32 bit is there, but I’m not sure it will fit on a small FPGA like I am using.

- A VGA display – I want to be able to generate graphics. Now that I’ve done my FPGA VGA character generator, this should be pretty easy. I hope to aim for Amiga-level graphics: 32 colors at once using 5 bitplanes, with each palette entry selecting a 12-bit color (4096 colors). Maybe we’ll even do hold-and-modify! I will extend my 3-bit color to 12-bit color by using a simple R-2R resistor ladder.

- SRAM memory – I am planning to use 128k asynchronous SRAM chips with a 12 ns access time, which would allow a clock of about 83 MHz. This will avoid having to build a cache hierarchy. I eventually would like to have a virtual memory system and support larger memory on the system. In that case I’ll probably add a DRAM and use the SRAM either as an L2 cache or solely as VRAM. In any case, I can simulate dual-ported RAM at a fast enough speed to do video draw.

- Simple IO peripherals – I will start with a simple serial link to the computer and a PS/2 port for keyboard interfacing.

- Integrated PCB – I would like to build a PCB that contains all components besides the main FPGA development board. It will attach to the FPGA development board through 4 female headers that plug into the male headers on the EP2C5 development board.
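To make the bitplane arithmetic in the VGA goal concrete, here is a minimal Python sketch of how 5 bitplanes combine into a palette index (0 to 31) and how a 12-bit palette entry splits into three 4-bit channels. The function names, the plane-0-is-least-significant ordering, and the leftmost-pixel-is-MSB convention are assumptions made for illustration, not a description of the actual video hardware.

```python
def planar_to_indices(planes):
    """Combine one byte from each bitplane into 8 palette indices.

    planes[0] supplies bit 0 of every index, planes[1] bit 1, and so on
    (assumed ordering). The most significant bit of each byte is taken
    to be the leftmost pixel.
    """
    indices = []
    for bit in range(7, -1, -1):
        index = 0
        for plane_no, byte in enumerate(planes):
            index |= ((byte >> bit) & 1) << plane_no
        indices.append(index)
    return indices


def split_rgb444(color):
    """Split a 12-bit palette entry into 4-bit (red, green, blue)."""
    return (color >> 8) & 0xF, (color >> 4) & 0xF, color & 0xF


if __name__ == "__main__":
    # Five planes give 2**5 = 32 possible indices per pixel.
    print(planar_to_indices([0b10000000, 0, 0, 0, 0b10000001]))
    print(split_rgb444(0xF80))
```

With 4 bits per channel there are 16 × 16 × 16 = 4096 possible colors, which is where the 4096 figure comes from.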

Stretch goals:

- More IO – SD card interfacing for self-hosting of programs. I would also like Ethernet or Wi-Fi for internet connectivity.

- C compiler – retarget LCC or clang

- Real OS – port Minix?

- Full PCB – a self-contained fully custom integrated board. Ditch the FPGA development board. This would require soldering some surface mount components for the first time. Yay!

Instruction Set

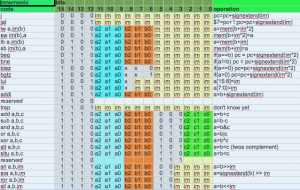

Now that we’ve done a lot of talking let’s look at the instruction set that I’ve settled on. It is MIPS-like, it has 8 registers. This is tight, much tighter than I would have liked. This gets into the start of tradeoffs. I want a fixed instruction size, this makes lots of things easier (PC increment, word fetches are always aligned, etc.). I need to keep the immediate sizes big enough to be practical but ensure there are enough registers to avoid lots of stack work. This is definitely not optimal, and instruction set crappyness is one of the reasons why I’m considering going 32 bit. Anyways here it is:

I’ll mention that it took a lot of iteration to get to this point. One of the main things was opcode encoding. I will say this is definitely not optimal. After I had done this, I looked at the Magic-1 page, where Bill talks about how he would do his encoding. One thing I really liked was always putting the destination as the last register (in the low bits), because that would allow a lot of my custom decode logic to be handled the same way for all operand formats.

Another thing to note is that I don’t really have any instructions for turning interrupts on and off. I also don’t have a multiply instruction (no room). I had wanted to put floating point instructions in too, but, again, no room. Not every combination of logical comparison is available either. Finally, there are no flag registers, so if you are doing multi-word arithmetic you need to use the compare instructions first to get the carry or borrow you will need later.
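To illustrate the no-flags point, here is a minimal Python sketch of 32-bit addition and subtraction built from 16-bit words, where the carry or borrow comes from an unsigned comparison instead of a flag register. The function names are hypothetical, and the sketch assumes the ISA offers some form of unsigned less-than compare; the actual instruction would be whichever compare the table above provides.

```python
MASK16 = 0xFFFF  # registers are 16 bits wide


def add32(a_lo, a_hi, b_lo, b_hi):
    """Add two 32-bit values held as (low, high) 16-bit words."""
    lo = (a_lo + b_lo) & MASK16
    # Unsigned compare stands in for the carry flag: the low-word sum
    # wrapped around exactly when the result is smaller than an operand.
    carry = 1 if lo < a_lo else 0
    hi = (a_hi + b_hi + carry) & MASK16
    return lo, hi


def sub32(a_lo, a_hi, b_lo, b_hi):
    """Subtract b from a, both held as (low, high) 16-bit words."""
    # Compare first: a borrow is needed exactly when a_lo < b_lo (unsigned).
    borrow = 1 if a_lo < b_lo else 0
    lo = (a_lo - b_lo) & MASK16
    hi = (a_hi - b_hi - borrow) & MASK16
    return lo, hi


if __name__ == "__main__":
    # 0x0001FFFF + 0x00000001 = 0x00020000
    print([hex(w) for w in add32(0xFFFF, 0x0001, 0x0001, 0x0000)])
    # 0x00020000 - 0x00000001 = 0x0001FFFF
    print([hex(w) for w in sub32(0x0000, 0x0002, 0x0001, 0x0000)])
```

In assembly, the compare costs one extra instruction and one temporary register per word, which matters with only 8 registers.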

Also, I decided to think a little bit about register conventions so that I could make proper call conventions. Here’s what I came up with:

- $0 – always 0

- $1 – arg0 and return value (caller saved)

- $2 – arg1 (caller saved)

- $3 – temporary (caller saved)

- $4 – callee saved

- $5 – callee saved

- $6 – stack pointer

- $7 – link pointer

The best way to check if an instruction set is good or not is to try to write some assembly. So here’s a routine that loops from 10 down to 0 and accumulates a sum:

    ori $1,$0,10 # counter
    or $2,$0,$0 # sum
    L0:
    add $2,$2,$1
    addi $1,$1,-1
    bne $0,$1,L0

Not bad. I actually went further and wrote a few routines to manage a character buffer. I included a puts to print zero terminated strings, a put_number to print unsigned integers (which uses a manual divide by 10 routine).

Assembler/Disassembler

Now that I have the instruction set, I need to be able to generate machine code from assembly. To write the assembler I chose Python, a decision I now regret for a number of reasons. The main advantage was that parsing was relatively easy and it was fast to get running. The main drawback is that I can’t plug it into my C++-based simulator as easily as I would like (and, yes, I know you can embed Python in a C++ program).

I decided to do a very simple two-pass assembler to get started. The main difficulty in assemblers is that you don’t know a priori where your labels will be in memory. A two-pass assembler makes a first pass to figure that out and then comes back and assembles everything (or you can assemble everything and then fix up the offsets in the second pass). If we assemble the snippet above we get this hex:

    $ python ../asm.py test.s
    0xb2 0x0a 0xe4 0x18 0xe4 0x81 0xb2 0x7f 0x70 0x7d

On pass 1 I parse and tokenize the assembly. I ignore comments, but I keep track of the current address in memory I will be assembling to. This address can be changed by the .org directive. Since my instructions are fixed length, I only need to add 2 bytes to the address after each instruction. Whenever I hit an instruction, I store its tokens away in a list along with the address the instruction will go to. I also record in a hash table which address each label maps to. Then I do a second pass that actually assembles by dispatching through a Python dict that maps from mnemonic to a function that assembles that mnemonic.
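The two passes described above can be sketched in a few dozen lines of Python. This is not the actual asm.py: the function names are hypothetical, and the bit layouts are inferred only from the five hex words shown above, so the real encoding table remains the authority. The first instruction is written as addi here, since that is the opcode the hex output corresponds to.

```python
# Field layouts inferred from the hex dump (assumptions, not the ISA table):
#   addi: 1011 | rd(3) | rs(3) | imm6
#   R-type: 1110 | rd(3) | rs(3) | funct(3) | rt(3), add=0, or=3
#   bne: 0111 | ra(3) | rb(3) | off6, in words relative to PC+2

def reg(token):
    number = int(token.lstrip("$"))
    if not 0 <= number <= 7:
        raise ValueError(f"bad register {token}")
    return number

def imm6(value):
    if not -32 <= value <= 31:
        raise ValueError(f"immediate {value} does not fit in 6 bits")
    return value & 0x3F

def asm_rtype(funct):
    def encode(ops, addr, labels):
        rd, rs, rt = (reg(op) for op in ops)
        return 0xE000 | rd << 9 | rs << 6 | funct << 3 | rt
    return encode

def asm_addi(ops, addr, labels):
    return 0xB000 | reg(ops[0]) << 9 | reg(ops[1]) << 6 | imm6(int(ops[2], 0))

def asm_bne(ops, addr, labels):
    offset = (labels[ops[2]] - (addr + 2)) // 2
    return 0x7000 | reg(ops[0]) << 9 | reg(ops[1]) << 6 | imm6(offset)

# Mnemonic -> assembling function, as in the dispatch dict described above.
ASSEMBLERS = {"add": asm_rtype(0), "or": asm_rtype(3),
              "addi": asm_addi, "bne": asm_bne}

def first_pass(source):
    """Record label addresses and queue (address, mnemonic, operands)."""
    addr, labels, pending = 0, {}, []
    for line in source.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        if line.endswith(":"):
            labels[line[:-1]] = addr
            continue
        parts = line.split(None, 1)
        ops = [op.strip() for op in parts[1].split(",")] if len(parts) > 1 else []
        if parts[0] == ".org":
            addr = int(ops[0], 0)
            continue
        pending.append((addr, parts[0], ops))
        addr += 2  # fixed-length instructions
    return labels, pending

def assemble(source):
    """Second pass: encode each queued instruction now that labels are known."""
    labels, pending = first_pass(source)
    return [ASSEMBLERS[m](ops, addr, labels) for addr, m, ops in pending]

if __name__ == "__main__":
    program = """
    addi $1,$0,10 # counter
    or $2,$0,$0 # sum
    L0:
    add $2,$2,$1
    addi $1,$1,-1
    bne $0,$1,L0
    """
    print(" ".join(f"{w:04x}" for w in assemble(program)))
```

Running it prints `b20a e418 e481 b27f 707d`, which matches the bytes above. Keeping one small function per mnemonic means a new instruction is one more dict entry.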

At the same time I wrote a disassembler so that I could verify that the assembler was working properly. For example, if we disassemble the above we get:

    0x0000: b2 0a : addi $1,$0,10
    0x0002: e4 18 : or $2,$0,$0
    0x0004: e4 81 : add $2,$2,$1
    0x0006: b2 7f : addi $1,$1,-1
    0x0008: 70 7d : bne $0,$1,-6 (0x0004)
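For the other direction, here is a minimal Python sketch of a disassembler that reproduces the listing format above. As before, the field layouts are guesses inferred from these five words only (4-bit opcode, two 3-bit registers, then either a 6-bit signed immediate or a 3-bit function code plus a third register), the bytes are assumed to be stored high byte first, and every name is hypothetical. Anything it does not recognize is printed as a raw `.word`.

```python
R_FUNCTS = {0: "add", 3: "or"}  # only the two function codes seen in the dump


def sign_extend6(value):
    """Interpret a 6-bit field as a signed number in the range -32..31."""
    return value - 64 if value & 0x20 else value


def disassemble_word(addr, word):
    op = word >> 12
    a = (word >> 9) & 7
    b = (word >> 6) & 7
    low = word & 0x3F
    if op == 0xE and (low >> 3) in R_FUNCTS:
        return f"{R_FUNCTS[low >> 3]} ${a},${b},${low & 7}"
    if op == 0xB:
        return f"addi ${a},${b},{sign_extend6(low)}"
    if op == 0x7:
        # Branch offsets are in words, relative to the next instruction.
        offset = sign_extend6(low) * 2
        target = (addr + 2 + offset) & 0xFFFF
        return f"bne ${a},${b},{offset} (0x{target:04x})"
    return f".word 0x{word:04x}"


def disassemble(data, origin=0):
    """Return one listing line per 2-byte instruction in data."""
    lines = []
    for i in range(0, len(data) - 1, 2):
        hi, lo = data[i], data[i + 1]
        addr = origin + i
        text = disassemble_word(addr, hi << 8 | lo)
        lines.append(f"0x{addr:04x}: {hi:02x} {lo:02x} : {text}")
    return lines


if __name__ == "__main__":
    code = bytes([0xB2, 0x0A, 0xE4, 0x18, 0xE4, 0x81, 0xB2, 0x7F, 0x70, 0x7D])
    print("\n".join(disassemble(code)))
```

Feeding the assembler's output back through a disassembler like this and comparing against the source is a cheap round-trip check on both tools.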

A simulator — kicking the tires

To really test this I needed to make sure I could write code. Making the actual processor seemed like a lot to bite off, so instead I wrote a behavioral simulator for the processor in C++. This turned out to be a good decision. I’ll talk a bit more about that and show how it works.